Copyright © 2007-2018 Russ Dewey

Operant Conditioning

Pavlov had many early admirers in America. John B. Watson, who gave behaviorism its name, studied Pavlovian conditioning early in the 20th century.

However, by the 1940s and 1950s American psychologists spent most of their time studying operant conditioning, a form of learning distinct from Pavlov's. Operant conditioning does not involve triggering reflexes. Operant conditioning involves exploratory or goal-seeking actions and their consequences.

Many of today's students have heard about operant conditioning. It is typified by the rat in a Skinner Box (named after B. F. Skinner, who coined the term operant conditioning in 1938). The rat presses a lever that sticks out from the side of the cage. The goal is to obtain food reinforcement.

Distinguishing Between Operant and Classical Conditioning

Here are some contrasts between classical and operant conditioning.

| Classical Conditioning | Operant Conditioning |
| --- | --- |
| A signal is placed before a reflex | A reinforcing or punishing stimulus is given after a behavior |
| Developed in Russia | Developed in U.S. |
| Known as "Pavlovian" | Known as "Skinnerian" |
| Also called "respondent conditioning" | Also called "instrumental conditioning" |
| Works with involuntary behavior | Works with voluntary behavior |
| Behavior is elicited | Behavior is emitted |
| Typified by Pavlov's dog | Typified by Skinner Box |

How does operant conditioning contrast with Pavlovian or classical conditioning? Classical conditioning always involves anticipatory biological responses triggered by a signal. The response is drawn out of the organism or elicited.

In operant conditioning, by contrast, the animal generates behavior on its own, as a way of achieving a goal. The behavior is emitted rather than elicited.

Operant conditioning always involves behavior, which is basically the same thing as activity. Until the modern era, when cognitive behavioral therapists declared inner speech a behavior, the term generally meant motor behavior or physical action.

A behavior reinforced or punished in operant conditioning is called an operant. Classically conditioned responses are more likely to include emotions or glandular responses.

Biologically, classical conditioning can involve motor responses. However, as we have seen, many important Pavlovian responses activate the autonomic nervous system: the division of the nervous system responsible for gut reactions and emotions.

Memory for classically conditioned responses occurs throughout the nervous system at the neural level, while memory for patterns of operant responses (i.e. complex non-instinctive behavior) typically requires the hippocampus, the part of the brain responsible for event memory.

What are differences between classical and operant conditioning?

The two forms of conditioning are intermingled within living organisms. They are not always conceptually distinct even to human psychologists. Discussions of whether classical and operant conditioning represent variations of the same underlying process can get quite complex.

For the beginning student, the challenge is to tell these two forms of conditioning apart. Usually the easiest way to do that is to look for a reflex: a biological, inborn stimulus-response circuit.

If a reflex is activated by a signal, then one is talking about classical conditioning. If the animal is engaging in something like exploratory or strategic activity, followed by a payoff or a punishment, one is talking about operant conditioning.

Technically, operants are defined as a class of behaviors that produce the same outcome in the environment. For example, a pigeon pecks a key. That is a key peck operant, because the pigeon operates on the environment and the result is a key-press.

Even if a pigeon were to kick the key with its foot instead of pecking it, the operant would be the same. (At least, this is how Skinner defined operants.) An operant is defined by its effects.

What is a formal definition of operant?

Perhaps the most-studied operant in American psychology is the bar-press response. A rat presses down a little bar sticking out from the side of a metal box called an operant chamber.

Any behavior that results in the bar being pressed, whether the rat does it with its paw or its nose, is defined as the same operant. That is because the effect on the environment (the bar being pressed) is the same.
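The idea that an operant is a class of behaviors defined by a shared effect can be sketched in a few lines of Python. This is only an illustration, not a standard tool: the `Behavior` class, the `group_into_operants` function, and the labels in `observed` are all hypothetical names invented for this example.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Behavior:
    topography: str  # what the movement looks like (hypothetical label)
    effect: str      # what the movement changes in the environment


def group_into_operants(behaviors):
    """Group observed behaviors by their effect on the environment."""
    operants = defaultdict(list)
    for b in behaviors:
        operants[b.effect].append(b.topography)
    return dict(operants)


# Made-up observations, for illustration only.
observed = [
    Behavior("presses bar with paw", "bar pressed"),
    Behavior("presses bar with nose", "bar pressed"),
    Behavior("rears against wall", "no change"),
]

operants = group_into_operants(observed)
```

In this sketch the paw press and the nose press land in the same group, because grouping looks only at the effect and ignores how the movement was made.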

Operant conditioning is also called instrumental conditioning, because the animal uses its behaviors as instruments to pursue a goal. Behaviors are emitted as a way of operating on the environment, and that is where operants got their name.

Starting Out in a Rat Lab

For half a century, from about 1940 to 1990 depending on the school, psychology students in the U.S. learned about operant conditioning in classes with titles like Conditioning and Learning or Animal Learning Laboratory. Usually this involved a so-called "rat lab."

Such courses still exist at some universities. However, they involve live animals, so they are expensive. Also, there are now many regulations to ensure humane care of laboratory animals. Consequently, many psychology departments are doing without such courses.

Where the courses still exist, they may be cross-listed between psychology and biology departments. Operant conditioning is very useful to biologists studying animals. It is the best way to induce laboratory animals to do what a human wants them to do.

Students who sign up for a rat lab often wonder if they will be repulsed by handling rats, but they end up enjoying them. Rats (when socialized since birth) are intelligent creatures capable of affectionate interactions with humans.

Many a retired rat lab rodent lives out its brief life (2-3 years) as a pet. During its career in the lab, the rat serves as an ideal subject for studying learning under controlled conditions.

Often the first thing students do in a rat lab is handle the rat. This helps the rat become accustomed to human contact, so it is not too anxious to perform during future experimental sessions. The same goes for the human.

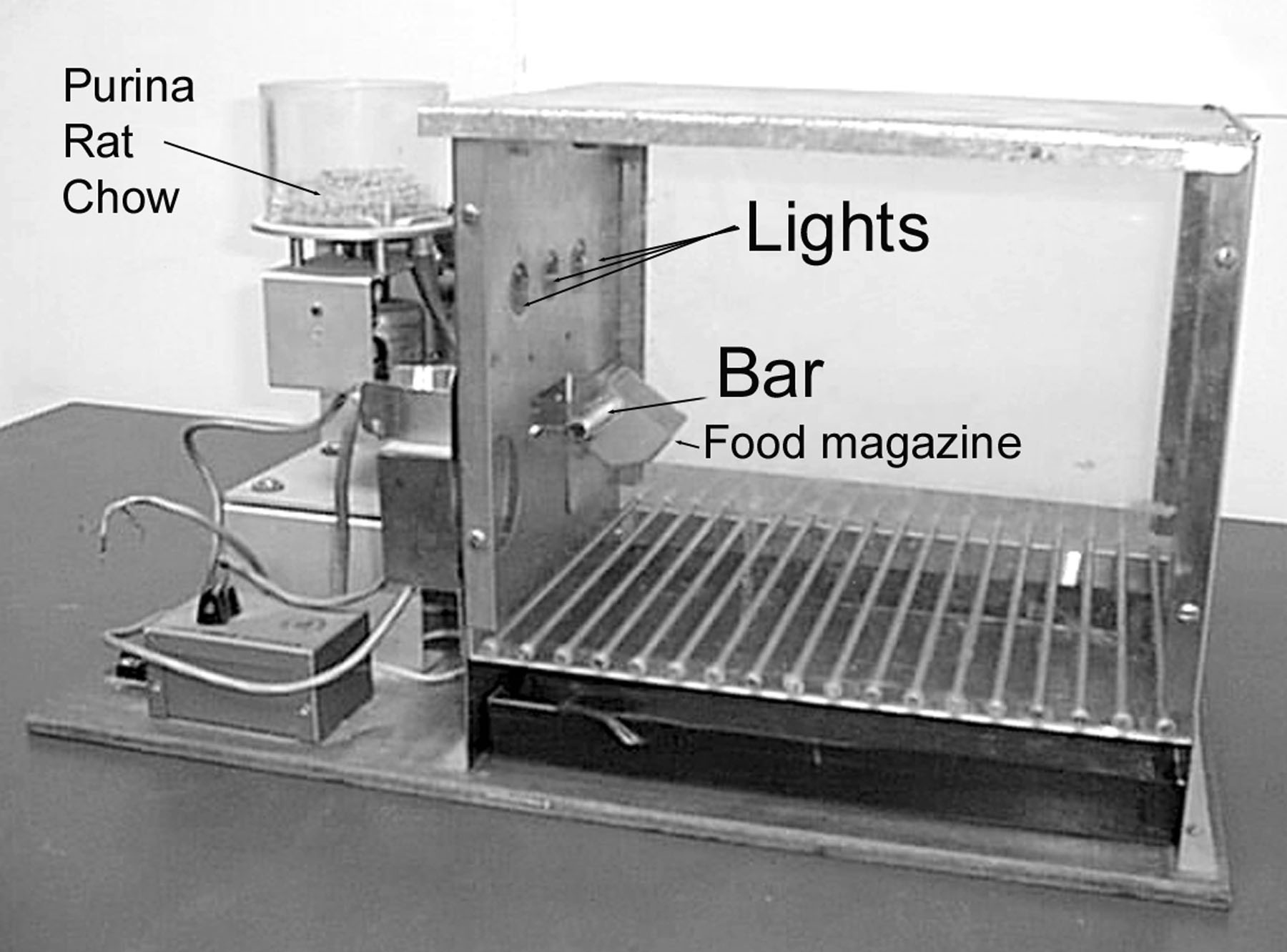

The next task might be to observe the rat in the operant chamber, a little cage where conditioning takes place. A typical operant chamber is shown here.

The operant chamber or Skinner Box for a rat

Researchers can put a light or buzzer on the wall. They dispense pellets using a remote controller. Pellets are released one at a time from an automated dispenser.

A lever (labeled "bar") sticks out of the wall of the operant chamber. This is the switch that the rat learns to press to get food. The food might be Purina Rat Chow or some other commercial rat food formed into little pellets.

What are typical features of an operant chamber?

If you were a student starting out in the rat lab, your first assignment might be to watch a rat in an operant chamber and write down all its behaviors for fifteen minutes. If the rat presses the bar or performs any other distinctive behavior, you make note of it.

The rat might not press the bar at all, or it might press the bar a few times while exploring the cage. Rats are inquisitive animals. The unreinforced rate of a response is the baseline level of responding.
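As a rough worked example, the baseline level can be expressed as responses per minute over the observation period. The function name `response_rate` and the press times below are assumptions made up for illustration; they are not data from a real session.

```python
def response_rate(press_times_min, session_minutes):
    """Return responses per minute over one observation session."""
    if session_minutes <= 0:
        raise ValueError("session length must be positive")
    return len(press_times_min) / session_minutes


# Hypothetical log: minutes at which an unreinforced bar press was observed.
baseline_presses = [2.5, 7.0, 11.25]

# Three presses in a fifteen-minute observation period.
baseline_rate = response_rate(baseline_presses, session_minutes=15)
```

With three presses in fifteen minutes, the baseline works out to 0.2 presses per minute. Later response rates can be compared against this figure to see whether reinforcement has made bar-pressing more frequent.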

Next comes a procedure called magazine training. (Magazine in this context means storage area.) Each cage has a food cup, the magazine, where food pellets are delivered.

The researcher can press a button on a remote control unit to deliver a food pellet at any time. To teach the rat where this food is delivered, a student can moisten a few pellets of food to increase their odor, rub them on the food cup (also called the hopper), and leave a few pellets in it.

The rat, if deprived of food overnight, will go toward the odor of the moistened pellet. It finds the pellets in the hopper and consumes them.

Now the rat knows where to find food. After the food is consumed, the rat starts exploring the cage looking for more food.

The student in the rat lab waits until the rat is near the food magazine, preferably facing it. Then the student presses a remote control button. A dry food pellet drops into the metal dish with a clattering sound.

What does a rat learn during magazine training?

The rat quickly learns that a certain stimulus (the sound of a dry food pellet clattering into a bowl) signals availability of food. The rat emits a response (running to the food hopper) that will be reinforced when the rat finds food there and eats it.

When a behavior is reinforced, that means it is followed by a stimulus that makes the behavior more frequent or probable in the future. In this case, the food reinforces the rat for going to the food bowl when it hears a pellet drop.

Now a student might start an operant conditioning procedure called shaping, also known as handshaping or the method of successive approximations. Shaping is a way to get an animal to perform a new behavior.

The goal behavior, the one the rat is supposed to learn, is called a target behavior. The first target behavior in the operant lab, other than running to the food bowl, is the bar-press. This is, of course, the act of pushing down the little rounded metal bar protruding from the side of the operant chamber.

How is a rat shaped to press the bar?

The method of successive approximations or shaping consists of reinforcing small steps toward the target behavior. First the student gives the rat a food pellet when it is anywhere near the little metal bar.

The rat learns this and it stays near the bar. Then the rat may be required to touch the bar before it receives a food pellet. (It is likely to touch the bar at some point, if it is hungry and exploring.)

Finally, it is required to press the bar to receive a food pellet. This process of reinforcing gradual changes toward a target behavior is what is labeled shaping or the method of successive approximations.
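The trainer's side of this procedure can be sketched as a simple rule: reinforce any behavior that meets the current requirement, then raise the requirement. The Python below is a minimal sketch under stated assumptions. The names `LEVELS`, `STEPS`, and `shape` are hypothetical, the session is made up, and the choice of two reinforced responses before moving to the next step is arbitrary. It models only the decision about when to deliver a pellet, not the rat's learning.

```python
# Hypothetical ranking of behaviors by closeness to the target behavior.
LEVELS = {"elsewhere": 0, "near bar": 1, "touches bar": 2, "presses bar": 3}

# Successive approximations, ending with the target behavior.
STEPS = ["near bar", "touches bar", "presses bar"]


def shape(observed, successes_to_advance=2):
    """Return (behavior, reinforced) decisions for one session.

    A behavior is reinforced when it meets or exceeds the current step.
    After enough reinforced responses, the requirement moves up one step.
    """
    step = 0
    successes = 0
    decisions = []
    for behavior in observed:
        reinforced = LEVELS[behavior] >= LEVELS[STEPS[step]]
        decisions.append((behavior, reinforced))
        if reinforced:
            successes += 1
            # Raise the requirement unless already at the target behavior.
            if successes == successes_to_advance and step < len(STEPS) - 1:
                step += 1
                successes = 0
    return decisions


# Made-up sequence of behaviors, for illustration only.
session = ["elsewhere", "near bar", "near bar", "near bar",
           "touches bar", "touches bar", "touches bar", "presses bar"]
decisions = shape(session)
```

In this made-up session, staying near the bar earns a pellet at first but stops paying off once the requirement moves up to touching the bar. The same happens to touching once a press is required.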

The method of successive approximations is very useful in dog obedience training or any other situation where animals must learn something new. We will discuss shaping more (using the example of teaching a dog to catch a frisbee) in the later section on applied behavior analysis.

