Copyright © 2007-2018 Russ Dewey

Expertise and Domain Specific Knowledge

An expert is a person with extensive knowledge about a particular subject matter or area of expertise. Much problem solving involves domain-specific knowledge.

That is knowledge relevant to a certain domain: a situation or class of problems. Domain-specific knowledge is what makes an expert an expert.

What distinguishes an expert? How does this relate to domain-specific knowledge?

Each environment requires its own sort of expertise. Living "on the street" as a homeless person in a major city requires a repertoire of behaviors and knowledge most of us lack.

The same could be said of running a high-energy physics lab, or running a successful car dealership, or playing a musical instrument. Virtually any area of human accomplishment requires knowledge unique to a particular domain.

Domain-specific knowledge allows the expert to do things that baffle or frustrate a beginner. The expert makes it look easy, but the expert achieves this behavior only after long hours of practice.

How is expertise accumulated?

Experts may invest tens of thousands of hours in developing domain-specific knowledge. Chase and Simon (1973) estimated that chess experts spend up to 50,000 hours learning to play chess.

That works out to 4 hours of chess a day for over thirty years. Simon and Gilmartin (1973) estimated that, as a result of this experience, the chess expert can recognize between 10,000 and 100,000 distinct patterns of chess pieces on a chessboard.

What did DeGroot discover?

A huge vocabulary of chess configurations (tactically important positions on a chess board) enables the expert to grasp the layout of a chessboard almost immediately. Some chess masters can play 20 chess games simultaneously, moving from board to board, winning almost all the games.

In his dissertation, DeGroot showed that chess masters had a fantastic memory for chess positions. Masters were able to reconstruct an entire chessboard full of positions after about 5 seconds of inspection.

What did Chase and Simon show, in research following up on DeGroot's study?

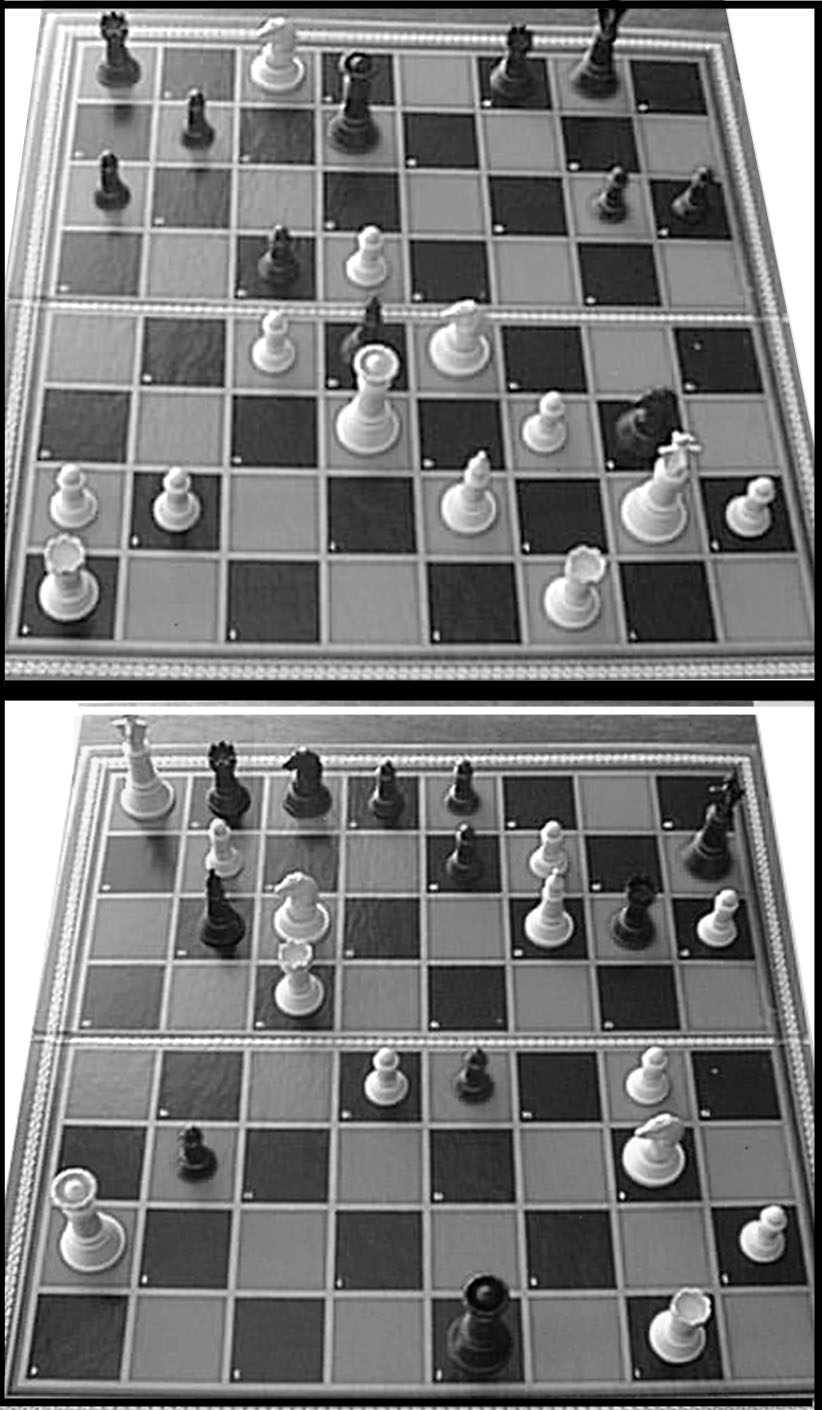

Chase and Simon (1973) did follow-up research in which they tested experts and beginning players with randomly placed pieces. With random positions (those not valid for chess) experts were no better than beginners at memorizing board positions.

With meaningful positions, the experts were much better than beginners. The difference is shown in the figure below. An actual mid-game configuration is on the top, a random configuration is on the bottom.

A meaningful configuration (top) and a random configuration (bottom)

To the expert chess player there is a big difference, and only the configuration on top is easy to memorize. To the non-player, neither picture shows meaningful patterns, so either can be memorized equally well.
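The memory advantage described above can be illustrated with a toy chunking model. This is only a sketch under stated assumptions: the two stored patterns, the piece placements, and the greedy matching rule are all invented for illustration and are not taken from Chase and Simon's study. The idea is that a position costs fewer "units" to remember when familiar patterns can be recognized in it.

```python
# Hypothetical patterns an expert might have stored (invented for illustration).
CASTLED_KING = frozenset({("g1", "K"), ("f1", "R"), ("f2", "P"), ("g2", "P"), ("h2", "P")})
PAWN_CHAIN = frozenset({("c3", "P"), ("d4", "P"), ("e5", "P")})

EXPERT_PATTERNS = [CASTLED_KING, PAWN_CHAIN]
NOVICE_PATTERNS = []  # a beginner recognizes no multi-piece patterns


def chunks_needed(position, known_patterns):
    """Count the memory units needed to encode a position (greedy chunking)."""
    remaining = set(position)
    chunks = 0
    for pattern in known_patterns:
        if pattern <= remaining:  # the whole pattern is present on the board
            remaining -= pattern
            chunks += 1
    # Pieces not covered by any known pattern are remembered one by one.
    return chunks + len(remaining)


# A "meaningful" position built from familiar patterns plus a queen.
meaningful = set(CASTLED_KING | PAWN_CHAIN | {("d1", "Q")})

# The same nine pieces scattered on arbitrary squares.
scrambled = {("a5", "K"), ("h7", "R"), ("b3", "P"), ("e8", "P"), ("c6", "P"),
             ("g4", "P"), ("d2", "P"), ("f6", "P"), ("h1", "Q")}

print(chunks_needed(meaningful, EXPERT_PATTERNS))  # 3
print(chunks_needed(meaningful, NOVICE_PATTERNS))  # 9
print(chunks_needed(scrambled, EXPERT_PATTERNS))   # 9
print(chunks_needed(scrambled, NOVICE_PATTERNS))   # 9
```

In this sketch the expert's advantage disappears on the scrambled board because none of the stored patterns match, which mirrors the pattern of results reported for random positions.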

An expert not only learns thousands of patterns and rules; the expert also knows when to break rules. Experts know about odd patterns that come up once in a blue moon. For an expert, the ability to recognize when not to apply a common rule is almost as important as knowing the rules in the first place.

In addition to learning thousands of patterns and rules, the expert must learn... what?

As noted above, Chase and Simon (1973) estimated that chess experts spent up to 50,000 hours learning to play chess. Ericsson, Krampe, and Tesch-Romer (1993) repeatedly cited the figure of ten years of experience as a typical requirement for expertise.

These reports were converted into the popular claim that "10,000 hours of experience doing anything will make you an expert." Malcolm Gladwell made this claim in a 2008 book titled Outliers.

Outliers are extraordinarily talented people in any field. Gladwell claimed that such individuals had one big thing in common: they accumulated about 10,000 hours of experience in their respective fields (which works out to 90 minutes a day for 20 years).
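Both practice estimates quoted so far can be checked with simple arithmetic. The sketch below assumes practice on every day of a 365-day year, which is a simplification; the function names are illustrative only.

```python
DAYS_PER_YEAR = 365  # assumption: practice every day, no days off


def years_needed(total_hours, hours_per_day):
    """Years required to accumulate total_hours at a fixed daily rate."""
    return total_hours / (hours_per_day * DAYS_PER_YEAR)


def hours_accumulated(hours_per_day, years):
    """Total hours accumulated at a fixed daily rate over a number of years."""
    return hours_per_day * DAYS_PER_YEAR * years


# Chess estimate: 50,000 hours at 4 hours a day.
print(round(years_needed(50_000, 4), 1))  # 34.2

# Gladwell's figure: 90 minutes (1.5 hours) a day for 20 years.
print(hours_accumulated(1.5, 20))  # 10950.0
```

So 50,000 hours at 4 hours a day is indeed a little over thirty years, and 90 minutes a day for 20 years comes to roughly 11,000 hours, close to the 10,000 quoted.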

Ericsson (2012) protested that his research had been mischaracterized. He never used the "10,000 hour rule" himself, and in fact, his original research showed that half the expert violinists he studied spent 5,000 hours or less in practice.

What did Ericsson say about the 10,000 hour rule?

"...Our research has never been about counting hours of any type of practice. In fact, it is now quite clear that the number of hours of merely engaging in activities, such as playing music, chess and soccer, or engaging in professional work activities has a much lower benefit for improving performance than deliberate practice."

Ericsson's research was about deliberate practice aimed at refining a skill. The amount required for expertise in a field varies greatly. Some pianists log upwards of 20,000 hours of deliberate practice. By contrast, 1,000 hours may be more than enough to train students to be expert memorizers.

The grain of truth in Gladwell's claim is that experience matters. Domain-specific knowledge and skills make a person more expert in any field. People who invest thousands of hours in deliberate practice of any skill are likely to be "outliers," as Gladwell pointed out, simply because most people will not make that kind of investment.

Expert Systems

Expert systems are computer programs that mimic the special knowledge of human experts. Expert systems are constructed by converting knowledge into if-then rules. A beginner can then make decisions like an expert, by answering a series of simple questions.
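The idea of if-then rules driven by simple questions can be sketched in a few lines. This is a minimal illustration, not the design of any real expert system: the car-repair rules, the question names, and the `advise` function are all hypothetical.

```python
# Each rule pairs an "if" condition with a "then" recommendation.
# The rules below are invented for illustration only.
RULES = [
    (lambda a: not a["engine_cranks"] and not a["lights_work"],
     "Charge or replace the battery."),
    (lambda a: not a["engine_cranks"] and a["lights_work"],
     "Check the starter motor."),
    (lambda a: a["engine_cranks"] and not a["has_fuel"],
     "Add fuel."),
]


def advise(answers):
    """Return the recommendation of the first rule whose condition holds."""
    for condition, recommendation in RULES:
        if condition(answers):
            return recommendation
    return "No rule applies; consult a human expert."


# A beginner only has to answer three yes/no questions.
answers = {"engine_cranks": False, "lights_work": False, "has_fuel": True}
print(advise(answers))  # Charge or replace the battery.
```

A real system would hold thousands of such rules; the structure, a condition paired with an action, stays the same.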

An early, successful example of an expert system was the MYCIN system. It was intended to help doctors diagnose medical conditions and find appropriate treatments.

MYCIN asks about the patient's symptoms. It guides the doctor through a series of diagnostic tests, ultimately recommending a medicine or treatment for the disorder.

What are expert systems?

Expert systems running on computers have succeeded in a variety of domains. They have located oil deposits for oil companies. They can guide stock purchases. They diagnose car repair problems. They are used in shopping mall kiosks to aid people matching make-up colors to hair or clothes.

Why did early dreams of making money on expert systems fail to come true?

When expert systems were a new concept, in the early 1980s, investment companies saw great commercial potential in them. Many new companies were formed, expecting to make a fortune by generating computer-based expertise. Then the bubble burst.

By the late 1980s there was a shake-out and many of the companies formed earlier in the decade failed. Part of the problem was that expert systems performed erratically (see the discussion of brittleness below).

Another problem was that expert systems turned out to be cheap to produce. A program for helping bank mortgage officers cost up to $100,000 in 1980 and required a mainframe computer.

By 1988 the same logic was contained in a program that cost about $100 and ran on any desktop computer. That was bad news for people who counted on making money selling expert systems to banks.

Why did some users become disillusioned with expert systems?

As expert systems became cheap and widely available, their shortcomings became more obvious. Many users tried them out briefly and became disillusioned with the amount of effort required to make them useful.

An expert system mimics the knowledge base of a human expert. But a human expert spends thousands of hours accumulating knowledge. To imitate this expertise, somebody must enter thousands of rules into a computer, mostly of the form, "If you see X, do Y, but only if Z is true...."

After the labor-intensive process of entering rules into a computer, an expert system must be thoroughly debugged. Expert systems are notorious for occasionally producing silly or absurd recommendations.

A human expert must monitor the performance of an expert system to catch unanticipated problems. No doctor in his right mind would follow all the advice of the MYCIN system, blindly, without thinking independently about whether the diagnosis made sense and was safe and appropriate for the patient.

Researchers have a word for the tendency of programs to function only in a limited, predictable context. Such behavior is called brittle. A brittle program works only when problems are structured in a specified way.

What does it mean to say that expert systems were "brittle"?

A brittle system may "break" (produce incorrect results) as soon as odd or unanticipated situations are encountered. Expert systems are usually brittle until they have been field-tested in many different situations. That is the only way to detect unanticipated problems.
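Brittleness is easy to reproduce in miniature. In the sketch below, which uses made-up car-repair rules and hypothetical function names, the first adviser silently assumes that only two complaints exist, so an unanticipated complaint produces an absurd recommendation. The second adviser uses the same rules but hands unfamiliar cases to a human.

```python
def brittle_adviser(symptom):
    """Toy adviser whose author anticipated only two complaints."""
    if symptom == "engine will not crank":
        return "check the battery"
    # Hidden assumption: anything else must be the one other known complaint.
    return "change the flat tire"


def monitored_adviser(symptom):
    """Same rules, but unanticipated input is referred to a human."""
    known = {
        "engine will not crank": "check the battery",
        "car pulls to one side": "change the flat tire",
    }
    return known.get(symptom, "no rule applies: ask a human expert")


print(brittle_adviser("smoke from under the hood"))    # change the flat tire
print(monitored_adviser("smoke from under the hood"))  # no rule applies: ask a human expert
```

The brittle version works perfectly on the two cases its author had in mind and fails without warning on anything else, which is why field testing in many different situations matters.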

Add up all the practical complications, and the end result is that expert systems require human baby-sitters, even after having many thousands of rules entered into them. That does not make them useless, but it does mean that important decisions cannot be turned over to computers until large amounts of experience are accumulated.

SOAR: Universal Sub-Goaling

Early researchers emphasized general problem solving procedures, as embodied in the General Problem Solver (GPS). Then, in the early 1980s, the importance of domain-specific knowledge was discovered.

For a while it looked like specific knowledge would always be the key to successful problem solving. But that, too, was too simple an approach. It became clear that real-life problem solving would require both general principles and domain-specific knowledge.

How did the emphasis change, in problem solving research?

In the late 1980s a new project attempted to combine existing approaches to problem solving. It was called SOAR (for State, Operator, and Result). The SOAR project involved dozens of cognitive scientists but is most identified with Allen Newell, one of the co-programmers of the General Problem Solver and a prominent cognitive scientist.

SOAR had three distinctive attributes:

1. It accepted the General Problem Solver approach as a general framework. Like the original GPS program, it represented problems as a space.

To solve a problem, the computer attempted to specify a series of steps that led from one state to another. This was done by selecting operations or steps that reduced the distance from the current state to the ultimate goal (hill-climbing).

2. SOAR used a library of if/then rules, like an expert system. When the if part of a rule matched the current situation, it executed the then part. Long-term memory, in SOAR, was a "mass of condition-action rules."

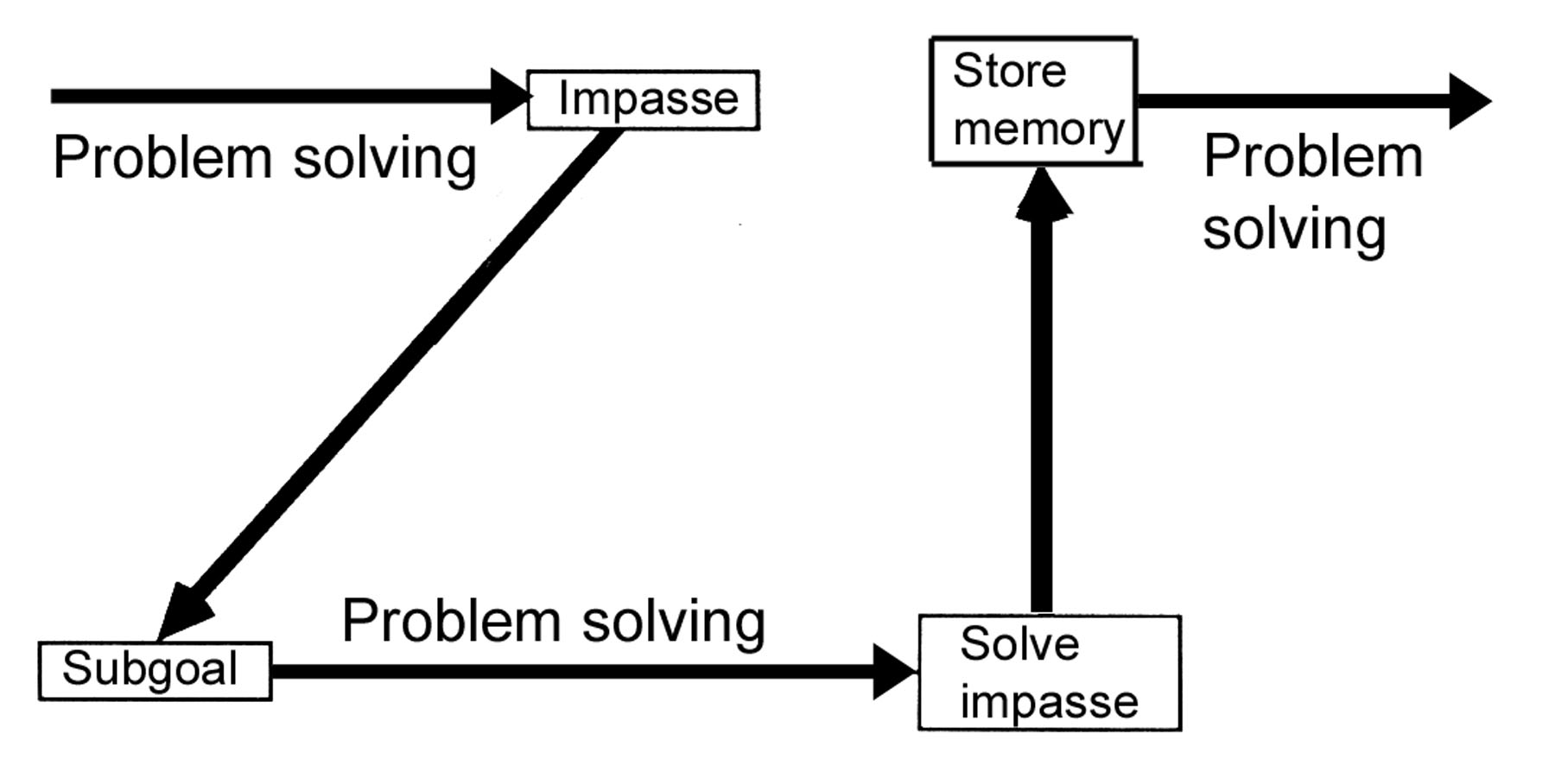

3. Whenever the program hit a snag or failed to locate a relevant if/then rule in its library, it treated this as a new problem. The snag or impasse became a "sub-goal" on the way to the main goal. (Waldrop, 1988)
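The first attribute, moving through a problem space by choosing the step that most reduces the distance to the goal, can be sketched as a simple hill-climbing loop. This is a generic illustration, not SOAR's or GPS's actual code: the number puzzle, the three operators, and the distance measure are assumptions chosen to keep the example small.

```python
def hill_climb(start, goal, operators, distance, max_steps=100):
    """Repeatedly apply the operator that brings the state closest to the goal."""
    state = start
    path = [state]
    for _ in range(max_steps):
        if distance(state, goal) == 0:
            break  # goal reached
        # Try every operator and keep the result nearest to the goal.
        best = min((op(state) for op in operators),
                   key=lambda s: distance(s, goal))
        if distance(best, goal) >= distance(state, goal):
            break  # stuck: no operator brings us closer
        state = best
        path.append(state)
    return path


# Hypothetical problem space: states are integers.
OPERATORS = [lambda n: n + 1, lambda n: n - 1, lambda n: n * 2]


def distance(state, goal):
    return abs(goal - state)


print(hill_climb(3, 20, OPERATORS, distance))
# [3, 6, 12, 24, 23, 22, 21, 20]
```

Note the second `break`: plain hill-climbing simply stops when no available step reduces the distance, which is one kind of snag a problem solver needs some further mechanism to handle.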

Newell regarded universal sub-goaling as SOAR's greatest innovation. If SOAR ran into a problem solving a sub-goal, it made that problem another sub-goal. This was SOAR's way of dealing with unexpected difficulties. SOAR does not break when it hits an impasse, in theory; it treats the impasse as a new problem to be solved.

What is universal sub-goaling and what function does it serve in SOAR?

A sub-goaling process, as used in SOAR

Humans act like this. We create sub-goals many layers deep.

For example, suppose you need to write a term paper. That is the goal. In order to write the paper, you must come up with a topic. That is a sub-goal. To research the topic, you decide to use Google Scholar.

Now you must solve the problem of looking up your topic in Google Scholar. To do that, you decide to use a computer. So that becomes a new sub-goal: locate a computer, start a browser, and go to Google Scholar, then look up your topic.

But first, you must propel yourself out of your chair. That becomes an immediate sub-goal. So you adjust your posture a bit and push up with both hands...

You would execute many thousands of subgoals before actually gathering information related to your topic. And that would only be the beginning of the project of completing a term paper.
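The nesting in the term paper example can be sketched as a small recursive routine. This is an illustration in the spirit of sub-goaling, not SOAR's implementation: the `SUB_GOALS` table and the `achieve` function are hypothetical, and only a handful of the sub-goals mentioned above are included.

```python
# Each goal maps to the sub-goals that must be completed first.
# A goal with no entry is treated as something that can be done directly.
SUB_GOALS = {
    "write term paper": ["choose topic", "research topic"],
    "research topic": ["look up topic in Google Scholar"],
    "look up topic in Google Scholar": [
        "locate a computer", "start a browser", "go to Google Scholar"],
    "locate a computer": ["get out of chair"],
}


def achieve(goal, depth=0, log=None):
    """Complete all sub-goals (recursively) before completing the goal itself."""
    if log is None:
        log = []
    for sub_goal in SUB_GOALS.get(goal, []):
        achieve(sub_goal, depth + 1, log)
    log.append((depth, goal))  # record nesting depth and completion order
    return log


for depth, goal in achieve("write term paper"):
    print("  " * depth + goal)
```

The printed order starts with "choose topic" and "get out of chair" and ends with "write term paper": the deepest sub-goals are completed first, and the original goal last.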

We discussed this same pattern when introducing the topic of routines in motor behavior. There sub-goals were called sub-routines, or sub-sub-routines (when a subgoal was embedded in another subgoal, as often happens).

This just goes to show that motor behavior is one example of problem solving in cognition. The same basic patterns of activity are found in all problem solving.

What behavior by humans resembles the operation of SOAR?

Humans commonly intertwine hundreds of sub-goals, routinely, without thinking much about it, to accomplish even a simple task. We analyze what it takes to reach a goal, arrange actions to get there, and unfold the actions in a precise sequence, all with amazing ease, thanks to years of practice from babyhood onward.

For an adult, a goal such as writing a term paper might require literally millions of sub-goals along the way. This is the ordinary stuff of human cognition. In imitating this mental capability, SOAR was probably on the right track toward human-like problem solving.

The main criticism of SOAR was that it did not do very well in handling unexpected sub-goals. When it hit a new difficulty, humans had to help out. Lindsay (1991) concluded, "SOAR has not yet shown it can avoid the charge of being programmed for each new task."

What was the main criticism of SOAR?

Newell died in 1992, a month after receiving national recognition for the SOAR project. The original SOAR is obsolete, but new versions are described on the SOAR project home page, and the SOAR architecture continues to inspire research and publications.

In the long run something like SOAR (using universal sub-goaling) must be programmed into computers, if they are to imitate human problem solving. The difficulty of making a computer do it has given cognitive scientists a fresh appreciation for the complexity of ordinary human cognitive processes.

---------------------

References:

Ericsson, K. A., Krampe, R. T., & Tesch-Romer, C. (1993). The role of deliberate practice in the acquisition of expert performance. Psychological Review, 100, 363-406.

Ericsson, K. A. (2012). Training history, deliberate practice and elite sports performance: an analysis in response to Tucker and Collins review–what makes champions? British Journal of Sports Medicine, 47. Retrieved from: https://bjsm.bmj.com/

Lindsay, R. K. (1991). Symbol-processing theories and the SOAR architecture. Psychological Science, 2, 294-302.

Waldrop, M. M. (1988). Soar: A unified theory of cognition? Science, 241, 296-298.

